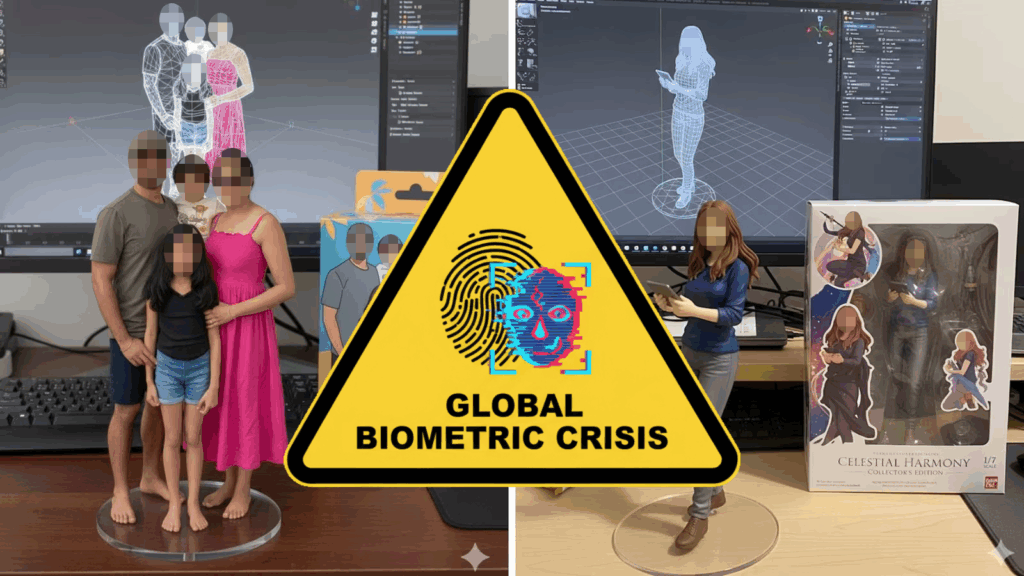

Imagine this: it’s Sunday evening and your Instagram feed is flooded with stunning 3D figurines that look exactly like your friends. Glossy skin, perfect proportions, charmingly packaged like collectible toys. “Made with Nano Banana AI!” the caption gleams. Within minutes, you’ve uploaded your own selfie to Google’s Gemini tool and, just like that, your digital doppelganger is born.

But what if this innocent fun has quietly turned into 2025’s most dangerous cybersecurity crisis?

This viral phenomenon, known as the Nano Banana trend, is creating the largest voluntary biometric database in human history, and most people, in North America, Europe and beyond, have no idea what they’ve just given away.

The Explosion of Nano Banana: When “Free” Comes at a Cost

Powered by Google’s Gemini 2.5 Flash Image tool, Nano Banana is a viral workflow that turns selfies into stylized 3D avatars. It has taken global social media by storm, generating over 200 million images worldwide in just a few weeks. From Silicon Valley professionals crafting corporate avatars on LinkedIn to TikTok users creating elaborate fantasy personas, the process is dangerously simple:

- Upload any selfie to Google AI Studio for free

- Type creative prompts like “vintage Hollywood star”, “anime figurine” or “cyberpunk warrior”

- Get a hyper-realistic 3D avatar seconds later

- Share everywhere and watch your engagement soar

Looks harmless, right? But beneath this digital glamour lies a looming privacy nightmare.

Privacy Laws in a Global Gray Zone

Whether under Europe’s GDPR, California’s CCPA, Canada’s PIPEDA, or other national laws, privacy regulations seem robust on paper. Yet AI-generated biometric avatars occupy a legal gray area. When you create a Nano Banana figurine, you aren’t just sharing a photo – you’re producing derivative biometric data that traditional laws may not cover.

Dr. Ann Cavoukian, former Privacy Commissioner of Ontario, warns of “consent fatigue”: users click “agree” without realizing they’re licensing their biological identity for AI training and commercial use indefinitely.

What You’re Really Sharing: Your Biometric Fingerprint

Every photo you upload carries what cybersecurity experts call a biometric fingerprint: your unique facial geometry, skin texture, micro-expressions, body proportions, even behavioral patterns like how you hold your phone or typical photo angles.

Here’s what you may be handing over:

- Precise GPS coordinates embedded in image metadata

- Device fingerprinting data (phone model, camera specs, OS version)

- Behavioral biometrics and habitual traits

- Social network mapping (who appears in your pictures and relationship dynamics)

- Psychological profiling insights revealed by your creative prompts

MIT Technology Review estimates the value of a single high-quality facial biometric profile to data brokers at $15-25, and when combined with behavioral data, that value skyrockets beyond $100 per person. Meanwhile, Google’s privacy policy claims images are deleted after processing, but experts warn that backup copies, AI training datasets and potential government data requests could preserve your biometric data indefinitely.

The Addictive Psychology Driving the Trend

Why is Nano Banana so irresistible in Western culture? It exploits our obsession with personal branding and social media validation. Unlike ordinary selfies, AI-generated self-portraits trigger stronger dopamine responses, creating what researchers call “digital narcissism amplification.”

Millennial and Gen-Z users, raised on “freemium” digital services, often overlook the risks until they find their face in a deepfake video they never approved.

The Criminal Ecosystem: How Your Face Becomes a Weapon

Forbes and cybersecurity firms reveal that hackers are exploiting viral AI trends to fuel identity theft, especially targeting affluent economies across Asia, the Americas, and Europe.

- Romance Scam Industrialization: Your facial data powers synthetic profiles on Tinder, Bumble or Hinge, or is used to impersonate “financial advisors” in investment scams. Deepfake video calls compromise corporations in business email compromise (BEC) fraud.

- Cryptocurrency and Financial Fraud: Multiple biometric profiles are combined to create “synthetic identities” that bypass KYC (Know Your Customer) on crypto exchanges and digital banks, fueling money laundering and securities fraud.

- Political and Corporate Espionage: AI-crafted disinformation campaigns leverage stolen images to increase trust among voters and corporate targets by appearing ultra-local and relatable.

According to Kaspersky’s Global Threat Report:

- Smartphone biometric attacks rose 29% in developed markets

- AI-enhanced phishing targeting high-net-worth individuals jumped 156%

- Deepfake-enabled fraud losses reached $2.8 billion in US and EU markets

Google’s SynthID Watermark: A False Sense of Security

Google proudly touts that every Nano Banana image carries an invisible SynthID watermark to identify AI-generated content. But experts are skeptical: “Watermarking is like putting a ‘Fragile’ sticker on a package – it only works if people respect it,” says Professor Hany Farid of UC Berkeley. The detection tools needed to read SynthID aren’t public and watermarks can be manipulated or removed within seconds.

The University of Maryland’s Soheil Feizi puts it bluntly: “Current watermarking technology is about as reliable as asking criminals to wear name tags.”

Real Stories of Digital Fun Turning into Damage

- A Fortune 500 CFO’s Nano Banana avatar was weaponized in a deepfake business email compromise, leading to a $2.3 million fraudulent transfer, of which only 12% was recovered.

- A Toronto marketing exec discovered her avatar was used in 47 fake dating profiles across North America – ruining her relationships and reputation.

- A Denver family’s Nano Banana creations were used in personalized scams targeting elderly relatives, resulting in a $15,000 loss and lasting emotional trauma.

The Stark Statistics You Can’t Ignore

| Statistic | Figure |
| --- | --- |
| Nano Banana images generated | 200+ million worldwide (78% from NA and Europe) |
| Smartphone biometric attacks | +29% in OECD countries |
| Identity theft losses | $78 billion in North America and Europe (2024) |
| Deepfake fraud attempts | 89% leveraged biometric data |
| Corporate BEC losses | $15.7 billion in 2025 (developed markets) |

What You Can Do to Protect Yourself – Starting Today

For Individuals:

- Audit your AI image uploads and delete any sensitive images

- Use metadata removal tools (ImageOptim for Mac, Photo Exif Editor for Android)

- Enable strict privacy controls on your device and apps

- Monitor your digital footprint with Google Alerts and “Have I Been Pwned”

- Consider cyber insurance for identity theft coverage
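To make the metadata-removal step above concrete, here is a minimal sketch of what such tools roughly do under the hood. It assumes the photo is an ordinary JPEG and uses only the Python standard library; the names `strip_jpeg_metadata` and `strip_file` are illustrative and not taken from any tool mentioned above. It drops the segments where EXIF data (GPS coordinates, phone model, camera specs) usually lives and leaves the picture itself untouched.

```python
import struct
from pathlib import Path

# JPEG segment markers that commonly carry metadata (assumption: dropping
# these is enough for typical phone photos; it is not a full privacy audit).
DROPPED_MARKERS = {
    0xE1,  # APP1: EXIF (GPS, device model, timestamps) and XMP
    0xED,  # APP13: Photoshop/IPTC blocks
    0xFE,  # COM: free-text comments
}


def strip_jpeg_metadata(data: bytes) -> bytes:
    """Return a copy of JPEG bytes without EXIF/XMP/IPTC/comment segments."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing start-of-image marker")
    out = bytearray(b"\xff\xd8")
    pos = 2
    while pos + 1 < len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"unexpected byte at offset {pos}")
        marker = data[pos + 1]
        if marker == 0xFF:  # fill byte before a marker, skip it
            pos += 1
            continue
        if marker in (0xDA, 0xD9):
            # Start of scan (compressed pixels) or end of image:
            # copy everything that remains verbatim and stop.
            out += data[pos:]
            break
        if pos + 4 > len(data):
            raise ValueError("truncated JPEG segment header")
        # Segment length is big-endian and includes its own two bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        if marker not in DROPPED_MARKERS:
            out += data[pos:pos + 2 + length]
        pos += 2 + length
    return bytes(out)


def strip_file(src: str, dst: str) -> None:
    """Write a metadata-free copy of the JPEG at src to dst."""
    Path(dst).write_bytes(strip_jpeg_metadata(Path(src).read_bytes()))
```

Calling `strip_file("selfie.jpg", "selfie_clean.jpg")` (hypothetical file names) before uploading would remove the embedded location and device details. It does nothing about the face in the image, which is the biometric data this article is mostly concerned with.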

For Parents and Families:

- Talk openly about digital permanence and risks

- Create family rules about AI tool usage

- Use parental controls such as iOS Screen Time or Android Family Link

- Teach critical thinking about “free” services and data privacy

For Businesses and Organizations:

- Update employee policies on AI and viral image usage

- Deploy AI deepfake detection solutions (from Sensity, Microsoft, etc.)

- Train staff using narrative-driven cybersecurity awareness

- Prepare Incident Response plans for AI-related fraud

- Review cyber liability insurance coverage

The Uncomfortable Truth: We’re in a Massive Experiment

Nano Banana isn’t just a viral art tool; it’s a tectonic shift in digital identity, privacy and data consent, especially in the West. Millions have willingly surrendered their biometric essence to algorithms and unknown hands. This uncontrolled experiment on human privacy has no “undo” button.

The Burning Question: Why Is It Free?

Powerful AI tools like Nano Banana come “free” because you’re not the customer; you’re the product. Your biometric data, behavior and creative choices generate exponentially more value than the cost to produce your glossy 3D figurine.

The September 2025 Reckoning

As this viral phenomenon concludes its explosive rise, the cybersecurity community delivers a clear message: fun and fraud are merging. Ignorance is no longer bliss. Your next social media post could decide whether you remain a digital citizen or become a digital commodity.

Before you share your next AI-generated selfie, ask yourself: Will you be a proud digital citizen or just another face sold to the highest bidder? Protect your identity. Protect your future. The time to act is now.

References

Cavoukian, Ann. “Privacy by Design: The 7 Foundational Principles.” Privacy by Design Foundation. https://www.privacybydesign.ca

MIT Technology Review. “The Hidden Economy of Biometric Data.” MIT Technology Review, 2025.

Stanford Human-Computer Interaction Lab. “Digital Narcissism and AI-Generated Self-Portraits Study.” Stanford University, 2025.

Kaspersky. “Attacks on smartphones increased in the first half of 2025.” Kaspersky Press Release, September 4, 2025. https://www.kaspersky.com/about/press-releases/kaspersky-report-attacks-on-smartphones-increased-in-the-first-half-of-2025

Google AI. “SynthID: Tools for watermarking and detecting LLM-generated content.” Google AI Developer Documentation. https://ai.google.dev/responsible/docs/safeguards/synthid

Farid, Hany. “Watermarking ChatGPT, DALL-E and Other Generative AIs Could Help Protect Against Fraud and Misinformation.” UC Berkeley School of Information, March 26, 2023. https://www.ischool.berkeley.edu/news/2023/hany-farid-watermarking-chatgpt-dall-e-and-other-generative-ais-could-help-protect-against-fraud-and-misinformation

Feizi, Soheil. “Researchers Tested AI Watermarks—and Broke All of Them.” University of Maryland TRAILS, October 18, 2023. https://www.trails.umd.edu/news/researchers-tested-ai-watermarksand-broke-all-of-them